PowerShell Pipeline

Measuring Data with PowerShell

Working with PowerShell, you can pretty much do anything and one of those things happens to be investigating a large amount of data to measure various things. Perhaps you want to find the total count of all of the data that you are looking at or want to figure out the average cost of supplies or other statistics and don't really want to load the data up in an excel spreadsheet to fun some formulas. PowerShell and do the work for you without even breaking a sweat

Using a CSV file containing Iowa Crash Data, we can analyze the data and provide some statistical information just by using PowerShell's existing commands.

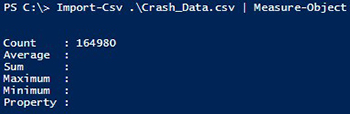

The CSV file is close to 34MB in size, so it may take a short time to download it. Once downloaded, we can determine just how many rows are in this file by importing the data and piping it to Measure-Object. This will by default list a count which represents the number of rows that have data in it.

Import-Csv .\Crash_Data.csv | Measure-Object

[Click on image for larger view.] Figure 1.

[Click on image for larger view.] Figure 1.

We can see that there are close to 165,000 rows of data in this CSV file. This should make for a great look at data and determining how often weather might have been a factor among other things. Let's grab a look at the first row of data to better understand what we are looking at.

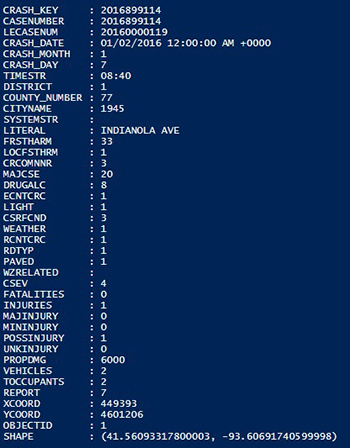

$CrashData = Import-Csv .\Crash_Data.csv

$CrashData | Select-Object -First 1

[Click on image for larger view.] Figure 2.

[Click on image for larger view.] Figure 2.

That is a lot of data for just a single row. Also, some of the columns may not be that understandable and a lot of the values have numbers in them. Typically this means that somewhere, there is a hash table or some key to value collection which can translate each of the numbers to a more human readable format. This is typical when storing data in a database to use a number that maps back elsewhere to a more human readable value.

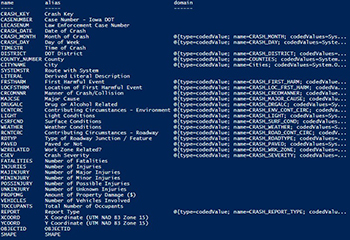

After a little bit of searching, I found the missing piece to this puzzle that identifies each column as well as the expected lookups to all of the numeric results. The link that hosts this data luckily returns the data in JSON meaning that I can use Invoke-RestMethod to pull the data down and read it easier than if I was just viewing the web page itself.

$Data = Invoke-RestMethod -Uri 'https://gis.iowadot.gov/public/rest/services/Traffic_Safety/Crash_Data/MapServer/0?f=pjson'

$Data.fields|Select-Object -Property Name, alias, Domain

[Click on image for larger view.] Figure 3.

[Click on image for larger view.] Figure 3.

Now we know what the column names are by looking at the alias. When looking at the domain, we can step into each of those hash tables to find out the numeric lookup values for items such as the weather.

($Data.fields | Where-Object {$_.name -eq 'weather'}).Domain.CodedValues

name code

---- ----

Clear 1

Cloudy 2

Fog, smoke, smog 3

Freezing rain/drizzle 4

Rain 5

Sleet, hail 6

Snow 7

Blowing Snow 8

Severe Winds 9

Blowing sand, soil, dirt 10

Other (explain in narrative) 98

Unknown 99

Not Reported 77

With this knowledge, we now know exactly the weather conditions that were reported at the time of the crash. Before we do that, let's run some measurements using Measure-Object to get an idea some averages of things like property damage and anything else that stands out to me.

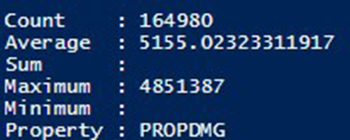

#Property Damage Average and Max

$CrashData | Measure-Object -Property PROPDMG -Average -Maximum

[Click on image for larger view.] Figure 4.

[Click on image for larger view.] Figure 4.

We see that the average amount per crash was $5155.02 while there was one crash where the property damage was $4,851,387! That is quite a bit of damage being done. That was pretty interesting, but I wonder how the weather played a factor in some of these crashes.

#Weather Related

$WeatherHash = @{}

($Data.fields | Where-Object {$_.name -eq 'weather'}).Domain.CodedValues | ForEach {

$WeatherHash.Add($_.Code,$_.Name)

}

$CrashData | Group-Object -Property WEATHER |

Select-Object -Property Count, @{L='Name';E={$WeatherHash[([int]$_.Name)]}} |

Sort-Object -Property Count -Descending

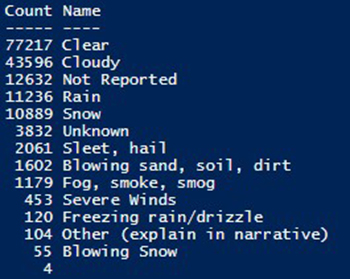

[Click on image for larger view.] Figure 5.

[Click on image for larger view.] Figure 5.

Here I made use of the JSON data to put the weather code and display name into a hash table for an easier lookup. I also used Group-Object to group everything together and then sorted the count to show the most common reasons for a crash and then the least common reasons. Weather is one thing, but I want to know if there are other environmental conditions which were reported at the time of the crash.

#Environmental Conditions

$ECNTCRC = @{}

($Data.fields | Where-Object {$_.name -eq 'ECNTCRC'}).Domain.CodedValues | ForEach {

$ECNTCRC.Add($_.Code,$_.Name)

}

$CrashData | Group-Object -Property ECNTCRC |

Select-Object -Property Count, @{L='Name';E={$ECNTCRC[([int]$_.Name)]}} |

Sort-Object -Property Count -Descending

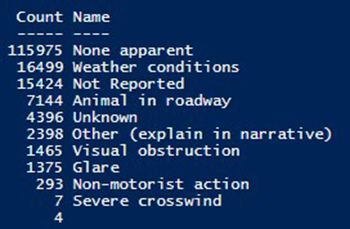

[Click on image for larger view.] Figure 6.

[Click on image for larger view.] Figure 6.

It appears that the majority of accidents didn't appear to have any environmental conditions with weather being second as a reason followed by nothing being reported and animals being on the road as the fourth most reported reason.

There is a wealth of information here that we can dig into and using PowerShell, are able to better visualize the data using Group-Object and Measure-Object. I've only scratched the surface with this data and we could continue to dig deeper by combining the grouped data by picking the largest weather condition (clear skies in this case) and determining other factors such as major cause of the crash and taking the top hit from that and determining which city had the most of the top major cause.

#Top Weather/City/Reason

#Weather

$WeatherHash = @{}

($Data.fields | Where-Object {$_.name -eq 'weather'}).Domain.CodedValues | ForEach {

$WeatherHash.Add($_.Code,$_.Name)

}

#Major Cause

$MAJCSEHash = @{}

($Data.fields | Where-Object {$_.name -eq 'MAJCSE'}).Domain.CodedValues | ForEach {

$MAJCSEHash.Add($_.Code,$_.Name)

}

#City

$CITYNAMEHash = @{}

($Data.fields | Where-Object {$_.name -eq 'CITYNAME'}).Domain.CodedValues | ForEach {

$CITYNAMEHash.Add($_.Code,$_.Name)

}

#Determine Top Weather

$TopWeather = $CrashData | Group-Object -Property WEATHER |

Select-Object -Property Count, @{L='Name';E={$WeatherHash[([int]$_.Name)]}}, Group |

Sort-Object -Property Count -Descending

#Determine Top City

$TopCity = $TopWeather[0].Group | Group-Object -Property CITYNAME |

Select-Object -Property Count, @{L='Name';E={$CITYNAMEHash[([int]$_.Name)]}}, Group |

Sort-Object -Property Count -Descending

#Determine Major Cause

$TopReason = $TopCity[1].Group | Group-Object -Property MAJCSE |

Select-Object -Property Count, @{L='Name';E={$MAJCSEHash[([int]$_.Name)]}}, Group |

Sort-Object -Property Count -Descending

[pscustomobject]@{

City = $TopCity[1].Name

Weather = $TopWeather[0].Name

Reason = $TopReason[0].Name

}

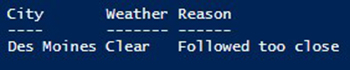

[Click on image for larger view.] Figure 7.

[Click on image for larger view.] Figure 7.

So based on our query, we can determine that the top weather condition is clear conditions and the top city which had reported the most clear conditions at the time of the wreck is in Des Moines with the number one reason for the crash was due to following too close to the car in front of them. Now you can take this data (or other data that is available) and conduct your own data analysis using PowerShell.

About the Author

Boe Prox is a Microsoft MVP in Windows PowerShell and a Senior Windows System Administrator. He has worked in the IT field since 2003, and he supports a variety of different platforms. He is a contributing author in PowerShell Deep Dives with chapters about WSUS and TCP communication. He is a moderator on the Hey, Scripting Guy! forum, and he has been a judge for the Scripting Games. He has presented talks on the topics of WSUS and PowerShell as well as runspaces to PowerShell user groups. He is an Honorary Scripting Guy, and he has submitted a number of posts as a to Microsoft's Hey, Scripting Guy! He also has a number of open source projects available on Codeplex and GitHub. His personal blog is at http://learn-powershell.net.