How To Size Page Files on Windows Systems

Page files can greatly affect the performance and recovery of Windows and Windows servers. Here's how to size page files to suit your computing needs.

- By Clint Huffman

- 07/05/2011

Page files can greatly affect the performance and recovery of Windows and Windows Server computers. Incorrect sizing of page files can lead to system hangs, excessive use of disk space, or the inability to create dump files during bug checks (popularly known as the blue screen of death).

Here, I'll show you how to size a page file on production 64-bit versions of Windows and Windows Server.

First: What is the page file? It's simply an extension of RAM used to store modified pages (4 KB units of memory) that are not found on disk. If I type "Page files are cool!" into Notepad, then that text is the only potentially pageable memory, simply because it is the only thing in Notepad's working set that is not found anywhere on disk. Once I click Save, none of Notepad's working set is pageable because all of it is already on disk. Why write the DLL and EXE memory to the page file when it can simply be retrieved elsewhere on the disk?

The page file is commonly referred to as "virtual memory," but the term is already used to describe the virtual address space of processes, which is a different concept and can be confusing. So, I prefer to call the page file a physical extension of RAM on disk.

The first consideration for sizing page files is to look at the crash dump settings. During a crash dump, the NTFS file system is no longer available, but the operating system needs to write the memory in RAM to disk. The page file structure is on disk, so it is a logical choice to use as a way of writing to disk. The operating system deletes the contents of the page file and attempts to write the contents of RAM to the page file structure. Once written, the page file is renamed to memory.dmp and becomes the crash dump file.

The page file must be large enough to accommodate the selected crash dump setting, so it is a major consideration for sizing the page file. Windows and Windows Server have three crash dump settings, each of which requires a different page file size:

- Complete memory dump: This option is selected by default if the computer has less than 4 GB of RAM. This setting dumps the entire contents of RAM to the page file. Therefore, the page file must be the size of RAM plus 1 MB. The 1 MB is needed to accommodate the header data. This is most likely why the 1.5 times RAM page file sizing myth became popular.

- Kernel memory dump: This is the default if the computer has 4 GB of RAM or more. This setting dumps only the kernel memory. Kernel memory usage can range widely, so there is no clear page file size for it. The "Windows Internals 5th Edition" book suggests a minimum page file size of 800 MB for computers with more than 8 GB of RAM.

- Small memory dump: If a Small memory dump is selected, then a page file of 2 MB or more is needed.
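As a rough illustration, the three settings above can be written as a small Python helper. The function names and constants are hypothetical, and the 800 MB kernel figure is only the suggested minimum quoted above, since kernel memory usage varies widely.

```python
MB = 1024 ** 2
GB = 1024 ** 3


def default_dump_setting(ram_bytes: int) -> str:
    """Default crash dump setting as described in the article:
    complete below 4 GB of RAM, kernel at 4 GB or more."""
    return "complete" if ram_bytes < 4 * GB else "kernel"


def min_page_file_for_dump(dump_setting: str, ram_bytes: int) -> int:
    """Minimum page file size (bytes) needed for a crash dump setting."""
    if dump_setting == "complete":
        # All of RAM plus 1 MB for the header data.
        return ram_bytes + 1 * MB
    if dump_setting == "kernel":
        # Assumption: the 800 MB suggested minimum; real kernel usage varies.
        return 800 * MB
    if dump_setting == "small":
        return 2 * MB
    raise ValueError(f"unknown dump setting: {dump_setting!r}")


if __name__ == "__main__":
    ram = 2 * GB
    setting = default_dump_setting(ram)
    print(setting, min_page_file_for_dump(setting, ram) // MB, "MB")
```

For a 2 GB machine this reports the complete memory dump case, which needs a page file of RAM plus 1 MB.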

The second consideration for page file sizing is to accommodate the System Commit Charge and the System Commit Limit. The system commit charge is the total amount of committed memory that is in use by all processes and by the kernel. In other words, it is all of the memory that has been written to or "promised" and must have a physical resource (RAM or page file) behind it.

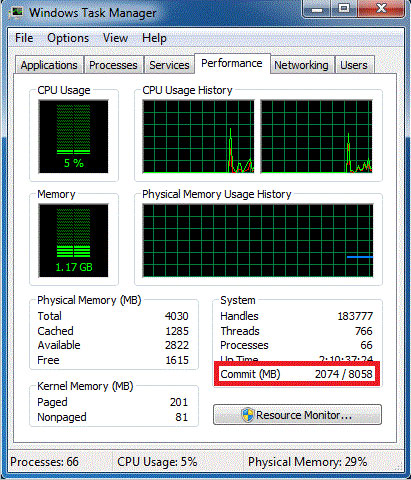

If the commit charge reaches the commit limit, then the commit limit has to be increased or the committed memory must be de-allocated. The commit limit can be increased by increasing the amount of RAM or by increasing one or more of the page file sizes. De-allocation of committed memory can only happen when the owning process willingly releases memory or when a process ends. I can quickly see the system commit limit and system commit charge by using Task Manager (see Fig. 1) or by using performance counters (see Fig. 2).

Figure 1. Task Manager shows the System Commit Charge (left) and System Commit Limit (right).

Note: You can calculate the size of all of the page files by taking the system commit limit and subtracting the amount of RAM installed.
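The relationship in this note is simple arithmetic, sketched here in Python. The helper names are hypothetical, and the figures in this article are approximate, so treat the results as estimates.

```python
GB = 1024 ** 3


def commit_limit(ram_bytes: int, page_file_sizes: list[int]) -> int:
    """System commit limit: installed RAM plus the size of every page file."""
    return ram_bytes + sum(page_file_sizes)


def total_page_file_size(commit_limit_bytes: int, ram_bytes: int) -> int:
    """Combined size of all page files: commit limit minus installed RAM."""
    return commit_limit_bytes - ram_bytes


if __name__ == "__main__":
    # A machine with 16 GB of RAM and a single 16 GB page file.
    limit = commit_limit(16 * GB, [16 * GB])
    print(limit // GB, "GB commit limit")
    print(total_page_file_size(limit, 16 * GB) // GB, "GB of page files")
```

A machine with no page file has a commit limit equal to its installed RAM, so the subtraction yields zero.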

A few months ago, I came across a 64-bit Windows Server 2008 computer that had 16 GB of RAM and a 16 GB page file, giving it a 32 GB system commit limit (counter values shown in Fig. 2). While this sounds like a lot, the commit limit was reached, the page file was unable to grow, and the system refused any further memory requests -- a very bad thing. At this point, the administrator must get involved to increase the page file, increase the amount of RAM installed (hot-add RAM), wait for the process consuming the memory to de-allocate it, or kill the processes consuming the most committed memory, which can be identified by using the \Process(*)\Private Bytes performance counter.

Figure 2. Performance counter values of a server with 16 GB of RAM and a 16 GB page file where the commit limit was too low.

A common practice is to set the page file to a fixed maximum size to prevent page file fragmentation. This can certainly help if the page file is used frequently. But in this case, it ultimately caused this server to fail to allocate memory, even though the system had plenty of free space on the C: drive. My advice is to allow the system to manage the page file for you initially, and then fine-tune it later if needed.

Note: The commit charge peak is a great way to estimate the amount of initial RAM that should be installed for a given server. This is very helpful when assessing the hardware requirements of a computer such as moving a physical computer to a virtual computer.

On the other extreme, I came across a 64-bit Windows Server 2003 web server with 64 GB of RAM. In this case, the administrator used the 1.5 times RAM "guideline". This resulted in a 96 GB page file giving the system a commit limit of roughly 160 GB!

Figure 3. Performance counter values of a server with 64 GB of RAM and a 96 GB page file where the commit limit was too high.

The commit charge (Committed Bytes) was only about 8.7 GB at peak load, so the 160 GB of commit limit was a bit overkill. If the server will never need 160 GB of commit limit, then the only problem with this configuration is the wasted disk space. In this case, the computer's RAM and page file can be reduced to about 12 GB and still be enough to accommodate the system commit charge.

Note: This article does not cover RAM usage analysis, which is a different aspect to Windows troubleshooting. Please let me know if this is a topic you want covered.

My new laptop has a whopping 16 GB of RAM with a 128 GB solid state drive (SSD). It's a nice machine, but the 128 GB of disk space is a bit small. Normally, I would run a 1 GB page file to accommodate the Kernel memory dump, but since my commit charge is never over 8 GB, I don't need a page file. Therefore, I am running my laptop without a page file to save on the disk space.

Figure 4. Performance counter values of a laptop with 16 GB of RAM and no page file.

Fig. 4 shows that my laptop has a 16 GB commit limit -- I have 16 GB of RAM and no page file. This is perfectly okay as long as I don't care about saving crash dump files and never use more than 16 GB of committed memory.

In summary, you can properly size page files by following a few simple steps:

- Crash dump setting: Check your crash dump setting to see if your computer is set to "Complete memory dump" (default for computers with less than 4 GB of RAM). If so, your page file must be the size of RAM plus 1 MB or more. Otherwise, have a page file of 800 MB or more to accommodate a Kernel memory dump. If you don't care about crash dumps, then ignore this step.

- Monitor the System Commit Charge peak: Monitor your computer's commit charge to determine how much committed memory the system is using at peak. This can be monitored from the Performance tab in Task Manager or by monitoring the \Memory\Committed Bytes counter in Performance Monitor.

- Adjust the Commit Limit: Set the system commit limit to be larger than the highest monitored commit charge peak, plus a little extra room for growth. Remember, the commit limit is the sum of RAM and all of the page files. If you want to minimize paging, then try to have more RAM installed than the commit charge peak value. The commit limit on the server can be monitored either from the Performance tab in Task Manager or by monitoring the \Memory\Commit Limit counter in Performance Monitor.
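The steps above can be combined into one small calculation. The following Python sketch is only an illustration: the function name is hypothetical, and the 20 percent growth headroom is an assumed default, not a figure from this article.

```python
MB = 1024 ** 2
GB = 1024 ** 3


def recommended_page_file(ram_bytes: int,
                          peak_commit_bytes: int,
                          dump_minimum_bytes: int = 0,
                          headroom_percent: int = 20) -> int:
    """Suggest a total page file size in bytes.

    dump_minimum_bytes: page file size required by the crash dump
        setting (0 if crash dumps do not matter).
    headroom_percent: assumed growth margin on top of the observed
        commit charge peak (hypothetical default of 20).
    """
    # Target commit limit: observed peak plus room for growth.
    target_limit = peak_commit_bytes * (100 + headroom_percent) // 100
    # Commit limit = RAM + page files, so page files cover the shortfall.
    needed_for_commit = max(0, target_limit - ram_bytes)
    # The page file must also satisfy the crash dump requirement.
    return max(needed_for_commit, dump_minimum_bytes)


if __name__ == "__main__":
    # 16 GB of RAM, 20 GB commit charge peak, kernel dump minimum of 800 MB.
    size = recommended_page_file(16 * GB, 20 * GB, 800 * MB)
    print(size // GB, "GB page file")
```

When the commit charge peak fits comfortably in RAM, the result falls back to whatever the crash dump setting requires, which is zero if crash dumps are not needed.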

There are no performance counters that measure just page file reads and writes. Some articles on the Internet refer to using the \Memory\Pages/sec performance counter to measure page file usage, but don't be fooled: The Pages/sec counter measures hard page faults, which are resolved by reading from or writing to the disk. The disk activity might be in the page file or elsewhere on disk, meaning the counter is not measuring just page file usage. For example, backup software commonly sustains about 1,000 pages/sec with no involvement of the page file. Try opening up Microsoft Word on a freshly booted computer and I bet you will see about 1,000 pages/sec with no involvement of the page file.

The only way to truly see how much the page file is actively used is to gather a file system trace with a tool such as Sysinternals Process Monitor with the Enable Advanced Output option turned on.

If you want to have a dump file to accommodate crash dumps, but don't want to use a page file, then consider a Dedicated dump file. This is a Windows Vista, Windows Server 2008, and later feature that allows an administrator to create a page file that is not used for paging -- it is used for crash dumps only. For more information on Dedicated dump files, see the NTDebugging Blog post.

In conclusion, no one can blindly tell you how to properly size the page file on your computer. The crash dump settings, the system commit charge (total committed memory), the amount of RAM, and free disk space are all considerations for the size of the page file.

Also, check out Mark Russinovich's blog, Pushing the Limits of Windows: Virtual Memory, where he explains the relationship of virtual memory and the page file.