Windows Advisor

Put Hyper-V into Hyper-Drive

In this first article in a series on Hyper-V, Paul looks at squeezing the best performance out of the hypervisor through the right mix of processing power, both virtual and physical.

Picking the right hardware for your hosts and network in a new Microsoft Hyper-V implementation can be tricky, not to mention the task of measuring and monitoring performance when in production. In this series of articles, I'll look at the different components that make up a balanced underlying hardware fabric for Hyper-V, starting with processor allocation and continuing on to look at the memory, storage and network subsystems.

From there, we'll delve into performance tips and tricks, choosing the right flavor of Hyper-V and common configuration gotchas, and finish off with performance monitoring of VMs and how it differs from monitoring in the physical world.

Note: All recommendations apply to Hyper-V in Windows Server 2008 R2 with Service Pack 1. The new version of Hyper-V in the upcoming Windows Server 8 changes the game considerably as far as scalability limits are concerned, but that's a topic for another series of articles. The advice I offer here applies only to the most recent Windows Server version available at the time of writing.

Virtual & Logical Processors

Many IT admins I talk to have misconceptions about what virtual processors (VPs) and logical processors (LPs) are, and how they affect the maximum number of VMs on a given physical host. That maximum depends directly on the amount of physical memory in each host (which we'll cover next time) as well as on the number of processors assigned to VMs.

An LP is a core in a multi-core processor, so a quad-core CPU has four LPs. If that CPU has Hyper-Threading (HT) enabled, each core appears as two, so your system reports eight LPs. While this is how Microsoft's documentation counts LPs, be aware that HT doesn't magically double processor capacity. To be on the safe side, count only physical cores as LPs; don't double the number when HT is turned on.
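To make the counting rule concrete, here's a minimal Python sketch. The function name and parameters are hypothetical, and it assumes two hardware threads per core when HT is on (as with Intel Hyper-Threading). It returns both the LP count Windows reports and the conservative, cores-only count suggested above for capacity planning.

```python
def logical_processors(sockets: int, cores_per_socket: int,
                       hyperthreading: bool) -> tuple[int, int]:
    """Return (LPs as reported by Windows, conservative LPs for planning)."""
    cores = sockets * cores_per_socket
    # Assumption: HT exposes two logical processors per physical core.
    reported = cores * 2 if hyperthreading else cores
    # For planning, count physical cores only; HT doesn't double throughput.
    conservative = cores
    return reported, conservative

# A single quad-core CPU with Hyper-Threading enabled
print(logical_processors(1, 4, True))  # (8, 4)
```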

VPs are what you assign to individual VMs, and how many you can assign is dictated by the guest operating system. Newer is more capable in this case: Windows Server 2008 and 2008 R2 can work with four VPs, whereas Windows Server 2003 can only be assigned one or two. SUSE Linux Enterprise, CentOS and Red Hat Enterprise Linux (all supported versions of these OSs) can be assigned up to four VPs. If you're running client operating systems in a VDI infrastructure, Windows 7 can work with up to four VPs, while Windows Vista and Windows XP SP3 can each use two. More detailed information is available in Microsoft's list of supported Hyper-V guest operating systems.
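If you script VM provisioning, the limits above fit naturally into a lookup table. This Python sketch is only an illustration: the dictionary keys are informal labels rather than official product names, the values are the ones quoted in this article for Windows Server 2008 R2 SP1, and `check_vps` is a hypothetical helper.

```python
# Maximum VPs per guest OS on Hyper-V in Windows Server 2008 R2 SP1,
# as quoted in this article (keys are informal labels, not official names).
MAX_VPS = {
    "Windows Server 2008 R2": 4,
    "Windows Server 2008": 4,
    "Windows Server 2003": 2,
    "SUSE Linux Enterprise": 4,
    "CentOS": 4,
    "Red Hat Enterprise Linux": 4,
    "Windows 7": 4,
    "Windows Vista": 2,
    "Windows XP SP3": 2,
}

def check_vps(guest_os: str, requested_vps: int) -> bool:
    """True if requested_vps is within the supported range for guest_os."""
    if guest_os not in MAX_VPS:
        raise KeyError(f"no entry for {guest_os!r}; check Microsoft's guest OS list")
    return 1 <= requested_vps <= MAX_VPS[guest_os]

print(check_vps("Windows Server 2003", 4))  # False
print(check_vps("Windows 7", 4))            # True
```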

Just because you can assign two or four VPs to a particular VM doesn't mean you should. First, there's some overhead in any multiprocessor system, physical or virtual, because of cross-processor communication. The penalty is smaller in newer OSs, so Windows Server 2008 R2 VMs will be fine with four VPs, whereas with Windows Server 2003 you might need to test whether two VPs bring any benefit in your particular situation. Second, it all depends on the workload: some applications are heavily multithreaded (think SQL Server and the like) and will thrive on several VPs, whereas single-threaded applications or those with only a few threads won't benefit much.
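One common way to reason about why a lightly threaded workload gains little from extra VPs is Amdahl's law, which this article doesn't itself cite: if a fraction p of the work can run in parallel, the best-case speedup on n processors is 1 / ((1 - p) + p/n). The Python sketch below is an idealized model that ignores the cross-processor overhead mentioned above, so real gains will be smaller; the p values are illustrative, not measurements of any particular application.

```python
def amdahl_speedup(parallel_fraction: float, vps: int) -> float:
    """Ideal speedup on `vps` processors when `parallel_fraction` of the work parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0 or vps < 1:
        raise ValueError("parallel_fraction must be in [0, 1] and vps >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / vps)

# Single-threaded, moderately threaded and heavily multithreaded workloads
for p in (0.0, 0.5, 0.95):
    print(p, [round(amdahl_speedup(p, n), 2) for n in (1, 2, 4)])
```

A single-threaded workload (p = 0) gets exactly 1x no matter how many VPs you give it, while even a workload that is 95 percent parallel tops out well short of 4x on four VPs.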

Another common misconception is that each VP assigned to a VM maps to a dedicated physical core. Think of it instead as giving the VM a share of scheduled CPU time, with the hypervisor spreading the load of running VMs across all available CPU cores.

The total number of VPs assigned to VMs on a particular host matters because Microsoft recommends no more than four VPs per LP, with a supported maximum of eight VPs per LP. The exception: if all your VMs run Windows 7 in a VDI scenario, the maximum supported ratio is 12 VPs per LP.
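Those thresholds reduce to a simple classification. In this minimal Python sketch, the function name and the returned labels are hypothetical; the 4:1, 8:1 and 12:1 figures are the ones quoted above.

```python
def classify_ratio(total_vps: int, lps: int, all_win7_vdi: bool = False) -> str:
    """Compare a host's VP:LP ratio with the guidance quoted in this article."""
    if lps <= 0:
        raise ValueError("lps must be positive")
    ratio = total_vps / lps
    # 4:1 is the recommendation; 8:1 (12:1 for all-Windows 7 VDI) is the supported maximum
    supported_max = 12 if all_win7_vdi else 8
    if ratio <= 4:
        return "within recommendation"
    if ratio <= supported_max:
        return "above recommendation, still supported"
    return "exceeds supported maximum"

print(classify_ratio(32, 8))                     # within recommendation
print(classify_ratio(64, 8))                     # above recommendation, still supported
print(classify_ratio(80, 8))                     # exceeds supported maximum
print(classify_ratio(80, 8, all_win7_vdi=True))  # above recommendation, still supported
```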

For example, on a Hyper-V host with two quad-core CPUs (eight LPs), you can safely run eight VMs with four VPs each (32 VPs total), up to a maximum of 16 such VMs (64 VPs total). If you assigned only two VPs to each VM, you could double those numbers in this artificial example where every VM is identical. In the real world, of course, the number of VPs will vary between VMs based on the workload inside each one.
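The arithmetic in that example generalizes to any host and any per-VM VP count. This is a sketch for identical VMs only, with a hypothetical function name; mixed VM sizes need the per-VM totals instead.

```python
def max_identical_vms(lps: int, vps_per_vm: int, vp_per_lp: int) -> int:
    """How many identical VMs fit under a given VP:LP ratio."""
    return (lps * vp_per_lp) // vps_per_vm

lps = 2 * 4  # two quad-core CPUs, counting cores only
for vps_per_vm in (4, 2):
    print(vps_per_vm, "VPs each:",
          max_identical_vms(lps, vps_per_vm, 4), "VMs recommended,",
          max_identical_vms(lps, vps_per_vm, 8), "VMs maximum")
```

Run as written, this prints 8 recommended and 16 maximum VMs at four VPs each, and 16 and 32 at two VPs each, matching the figures above.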

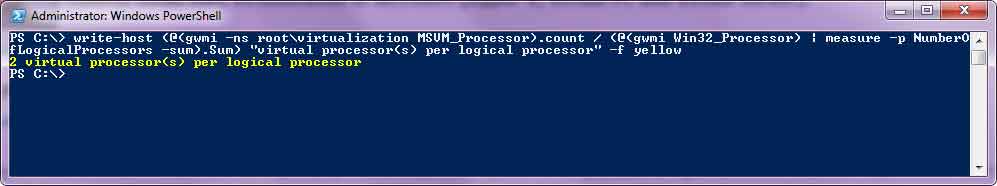

To check the ratio on your hosts, you could manually look at each running VM and add up the assigned VPs, but that isn't very efficient. A better way is to run this simple PowerShell one-liner, which gives you the answer:

```powershell
# Total VPs of running VMs divided by the host's total LPs
write-host (@(gwmi -ns root\virtualization MSVM_Processor).count / (@(gwmi Win32_Processor) | measure -p NumberOfLogicalProcessors -sum).Sum) "virtual processor(s) per logical processor" -f yellow
```

Thanks to Ben Armstrong, Virtualization Program Manager at Microsoft, for this one-liner.

Figure 1 shows the result on my quad-core laptop with HT enabled (eight LPs) running four VMs with four VPs each: 16 VPs across eight LPs gives a ratio of 2.

Figure 1. Easily pinpoint the VP-to-LP ratio on your Hyper-V hosts with this simple one-liner.
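If you want to play what-if before changing a host, the same arithmetic is easy to reproduce offline. This Python sketch is an illustration, not a replacement for the one-liner: the function name is hypothetical, and you supply the VP counts of the running VMs yourself (for example, from Hyper-V Manager).

```python
def vp_lp_ratio(vps_per_running_vm: list[int], lps: int) -> float:
    """Same arithmetic as the PowerShell one-liner: total VPs / total LPs."""
    if lps <= 0:
        raise ValueError("lps must be positive")
    return sum(vps_per_running_vm) / lps

# The Figure 1 scenario: four running VMs with four VPs each, eight LPs (HT on)
print(vp_lp_ratio([4, 4, 4, 4], 8), "virtual processor(s) per logical processor")
```

One detail worth knowing: `Win32_Processor`'s `NumberOfLogicalProcessors` includes HT threads, so on an HT host the one-liner divides by the reported LP count. Dividing by physical cores instead, per the conservative advice earlier, gives `vp_lp_ratio([4, 4, 4, 4], 4)`, a ratio of 4.0 for the same laptop.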

It's important to have an understanding of the workloads and applications you're going to be running in each VM: Are they CPU bound or memory bound? Do they benefit from multithreading and, thus, from additional VPs?

Make sure the processors you're investing in support Second Level Address Translation (SLAT), which Intel calls Extended Page Tables (EPT) and AMD calls Rapid Virtualization Indexing (RVI); earlier AMD documentation called it Nested Page Tables (NPT). On older processors that don't support SLAT, each VM occupies an extra 10 to 30 MB of memory, and processor utilization increases by 10 percent or more.
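The per-VM memory penalty adds up quickly on a dense host. This tiny sketch (hypothetical function name) simply scales the 10 to 30 MB range quoted above by the number of VMs; it says nothing about the extra CPU cost.

```python
def non_slat_memory_overhead_mb(vm_count: int) -> tuple[int, int]:
    """Extra host memory (low, high) in MB for vm_count VMs without SLAT."""
    # 10-30 MB per VM, the range quoted in this article
    return vm_count * 10, vm_count * 30

print(non_slat_memory_overhead_mb(20))  # (200, 600)
```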

Depending on your workload, SLAT can bring tremendous benefits. If you're virtualizing Remote Desktop Services, you might see up to 40 percent more sessions on SLAT-capable processors. Processors with large L2 and L3 caches will also help workloads with large memory requirements.

|

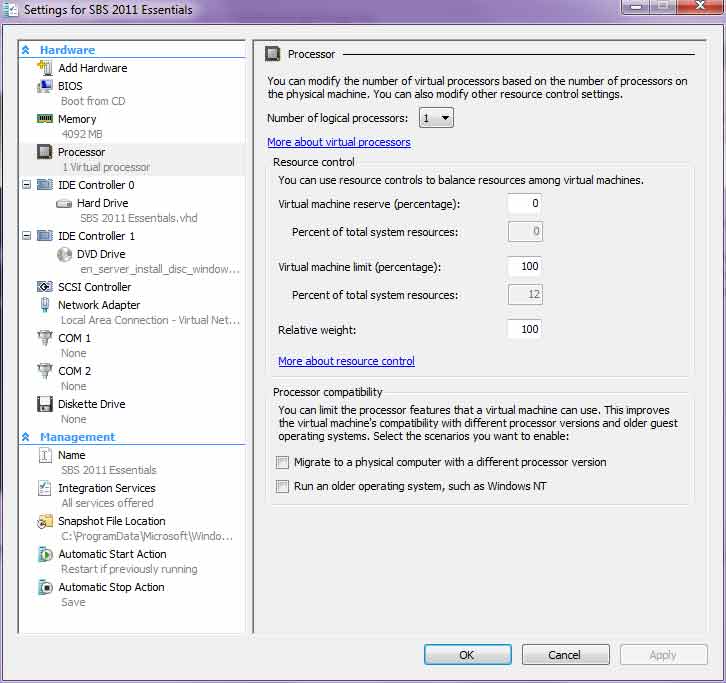

Figure 2. Assigning Virtual Processors to a VM is easy; just pick from the list. (Click image to view larger version.) |

Finally, if a host has limited CPU resources, you can alter the balance between VMs with three settings. The virtual machine reserve guarantees that this amount of CPU resources will always be available to the VM (but might limit the total number of VMs that can run on the host). The virtual machine limit controls how much of its assigned processor capacity the VM can use. The relative weight balances this VM against other running VMs; a lower value means it receives fewer resources in times of contention. Microsoft's recommendation is to leave these settings alone unless there's a compelling reason to change them.
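To build intuition for how reserve, limit and weight interact under contention, here's a deliberately simplified proportional-share model in Python. It is not Hyper-V's actual scheduler: all names are hypothetical, quantities are in "LP-equivalents" rather than the percentages Hyper-V Manager uses, and the only detail taken from Hyper-V is its default relative weight of 100. Reserves are granted first, then the remaining capacity is split by weight, with any share a VM can't use (because of its demand or limit) redistributed to the others.

```python
from dataclasses import dataclass

@dataclass
class VmCpu:
    name: str
    demand: float                # CPU the VM wants right now, in LP-equivalents
    reserve: float = 0.0         # guaranteed floor (toy units, not Hyper-V's %)
    limit: float = float("inf")  # hard cap (toy units)
    weight: int = 100            # relative weight; Hyper-V's default is 100

def allocate(vms: list[VmCpu], capacity: float) -> dict[str, float]:
    """Toy model: honour reserves, then share the rest in proportion to weight."""
    caps = {vm.name: min(vm.demand, vm.limit) for vm in vms}
    alloc = {vm.name: min(vm.reserve, caps[vm.name]) for vm in vms}
    remaining = capacity - sum(alloc.values())
    if remaining < 0:
        raise ValueError("reserves exceed host capacity")
    active = [vm for vm in vms if alloc[vm.name] < caps[vm.name]]
    # Each round either hands out everything left or retires at least one capped VM.
    while remaining > 1e-9 and active:
        total_weight = sum(vm.weight for vm in active)
        handed_out = 0.0
        still_hungry = []
        for vm in active:
            room = caps[vm.name] - alloc[vm.name]
            give = min(remaining * vm.weight / total_weight, room)
            alloc[vm.name] += give
            handed_out += give
            if give < room:
                still_hungry.append(vm)
        remaining -= handed_out
        active = still_hungry
    return alloc

host = 8.0  # eight LPs, all VMs busy
print(allocate([VmCpu("sql", 8, weight=300), VmCpu("web", 8)], host))
print(allocate([VmCpu("batch", 8, limit=2), VmCpu("web", 8)], host))
```

In the first case the VM with three times the weight ends up with three times the CPU (6.0 versus 2.0); in the second, the limited VM is capped at 2.0 and its unused share flows to the other VM.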

There are also processor compatibility settings that let you move VMs between hosts with different generations of processors and run ancient OSs such as Windows NT.

Next time, we'll look at networking, memory and storage planning considerations for a Hyper-V deployment.

About the Author

Paul Schnackenburg has been working in IT for nearly 30 years and has been teaching for over 20 years. He runs Expert IT Solutions, an IT consultancy in Australia. Paul focuses on cloud technologies such as Azure and Microsoft 365 and how to secure IT, whether in the cloud or on-premises. He's a frequent speaker at conferences and writes for several sites, including virtualizationreview.com. Find him at @paulschnack on Twitter or on his blog at TellITasITis.com.au.